Jul

02

Parsing Large JSON with NodeJS

Recently I was tasked with parsing a very large JSON file with Node.js. Typically, parsing JSON in Node is fairly simple. In the past I would simply read the whole file and parse it in one go.
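A minimal sketch of that read-everything approach might look like the following. The helper name `parseJsonFileSync` and the sample file written to the temp directory are assumptions made for illustration, not code from the original post.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Read the entire file into memory as a string, then parse it in one step.
function parseJsonFileSync(filePath) {
  const raw = fs.readFileSync(filePath, 'utf8');
  return JSON.parse(raw);
}

// Hypothetical sample file, created here only so the example runs on its own.
const samplePath = path.join(os.tmpdir(), 'small-sample.json');
fs.writeFileSync(samplePath, JSON.stringify([{ id: 1 }, { id: 2 }]));

const records = parseJsonFileSync(samplePath);
console.log(records.length); // prints 2
```

The whole file and the parsed result both sit in memory at once, which is what stops working for very large inputs.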

Or, even simpler, load the file directly with a require statement.
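As a rough illustration, `require` will read and parse a `.json` file for you. The file name and its contents below are made up for the example.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical sample file, created here only so the example runs on its own.
const configPath = path.join(os.tmpdir(), 'require-sample.json');
fs.writeFileSync(configPath, JSON.stringify({ name: 'demo', items: [1, 2, 3] }));

// require() reads the .json file synchronously, parses it, and caches the result.
const data = require(configPath);
console.log(data.items.length); // prints 3
```

Note that the parsed object is cached for the lifetime of the process, so this also keeps the full document in memory.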

Both of these work great with small or even moderately sized files. But what if you need to parse a really large JSON file, one with millions of lines? Reading the entire file into memory is no longer a great option.

Because of this I needed a way to stream the JSON and process it as it went. There is a nice module named 'stream-json' that does exactly what I wanted.

With stream-json, we can use the Node.js file stream to process our large data file in chunks.
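Here is a sketch of what that can look like, assuming the stream-json 1.x API (`npm install stream-json`) and a file whose top level is a JSON array. The wrapper function `streamJsonArray` and the command-line usage are my own illustrative choices, not part of the library.

```javascript
const fs = require('fs');

// Streams a file containing one large top-level JSON array and calls
// onItem(value, index) for each element, without loading the whole file.
function streamJsonArray(filePath, onItem) {
  // Loaded inside the function so the dependency is only needed when it runs.
  const StreamArray = require('stream-json/streamers/StreamArray');
  const jsonStream = StreamArray.withParser();

  return new Promise((resolve, reject) => {
    const fileStream = fs.createReadStream(filePath);
    fileStream.on('error', reject);
    jsonStream.on('error', reject);
    // Each 'data' event carries one array element as { key: index, value: element }.
    jsonStream.on('data', ({ key, value }) => onItem(value, key));
    jsonStream.on('end', resolve);
    fileStream.pipe(jsonStream);
  });
}

// Usage (hypothetical file names): node stream-example.js path/to/large.json
if (process.argv[2]) {
  let count = 0;
  streamJsonArray(process.argv[2], () => { count += 1; })
    .then(() => console.log(`Processed ${count} items`))
    .catch((err) => console.error(err));
}
```

Only the chunk currently being read and the element currently being assembled need to be held in memory, as long as the `onItem` handler does not keep references to everything it sees.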

Now our data can be processed without running out of memory. However, in the use case I was working on, I had an asynchronous process inside the stream. Because of this, I was still consuming huge amounts of memory, as the stream just backed up a very large number of unresolved promises that had to be kept in memory until they completed.

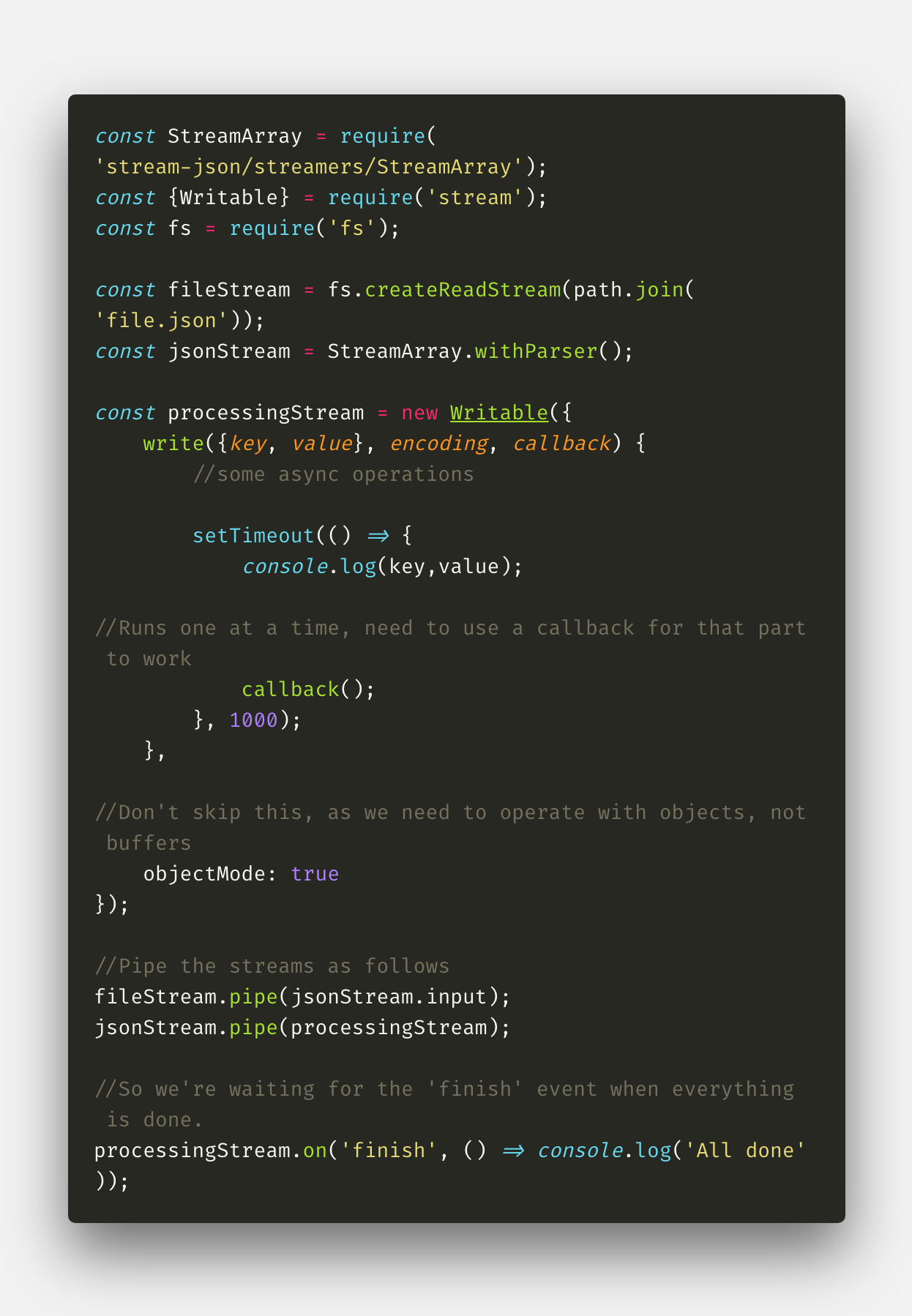

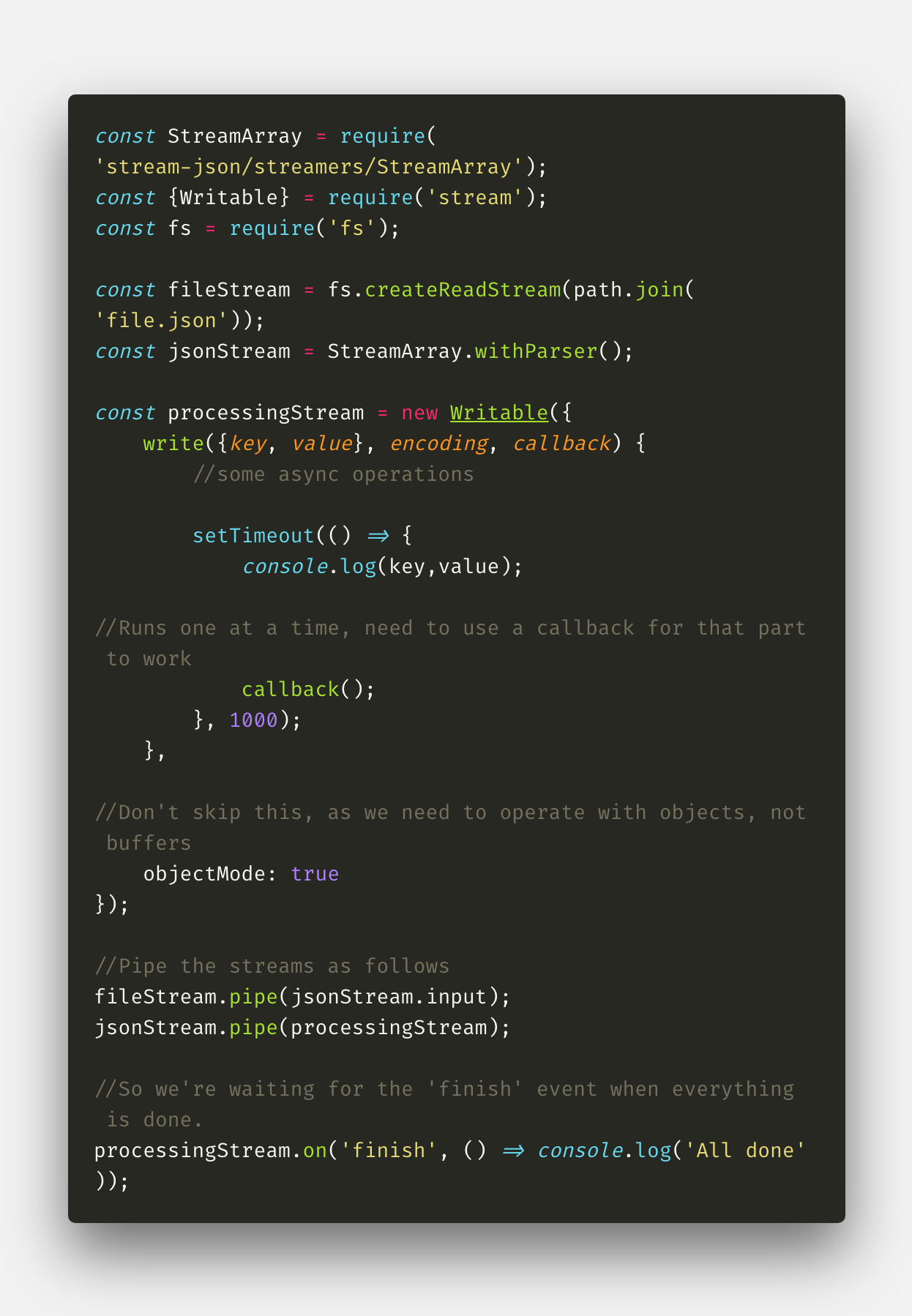

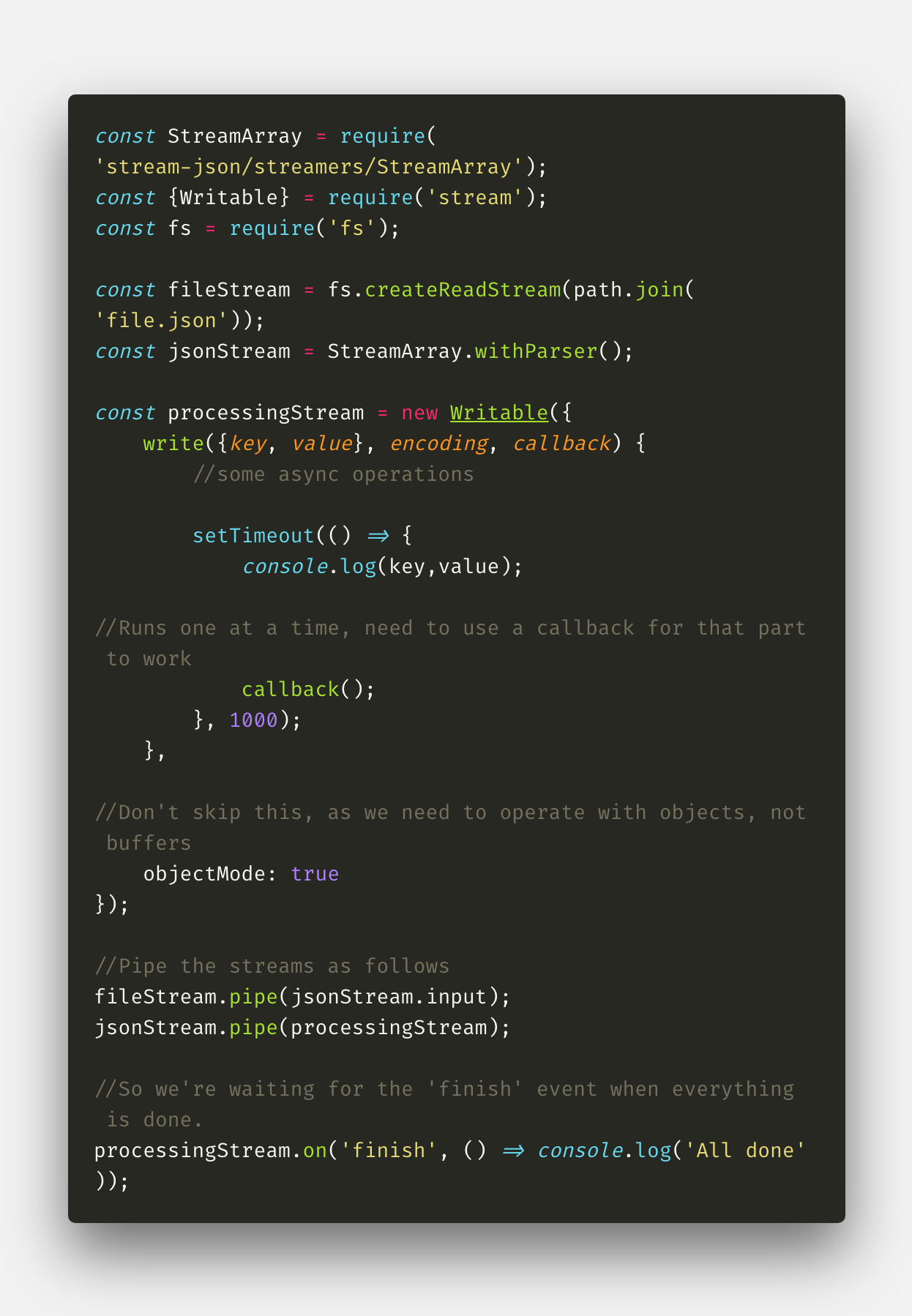

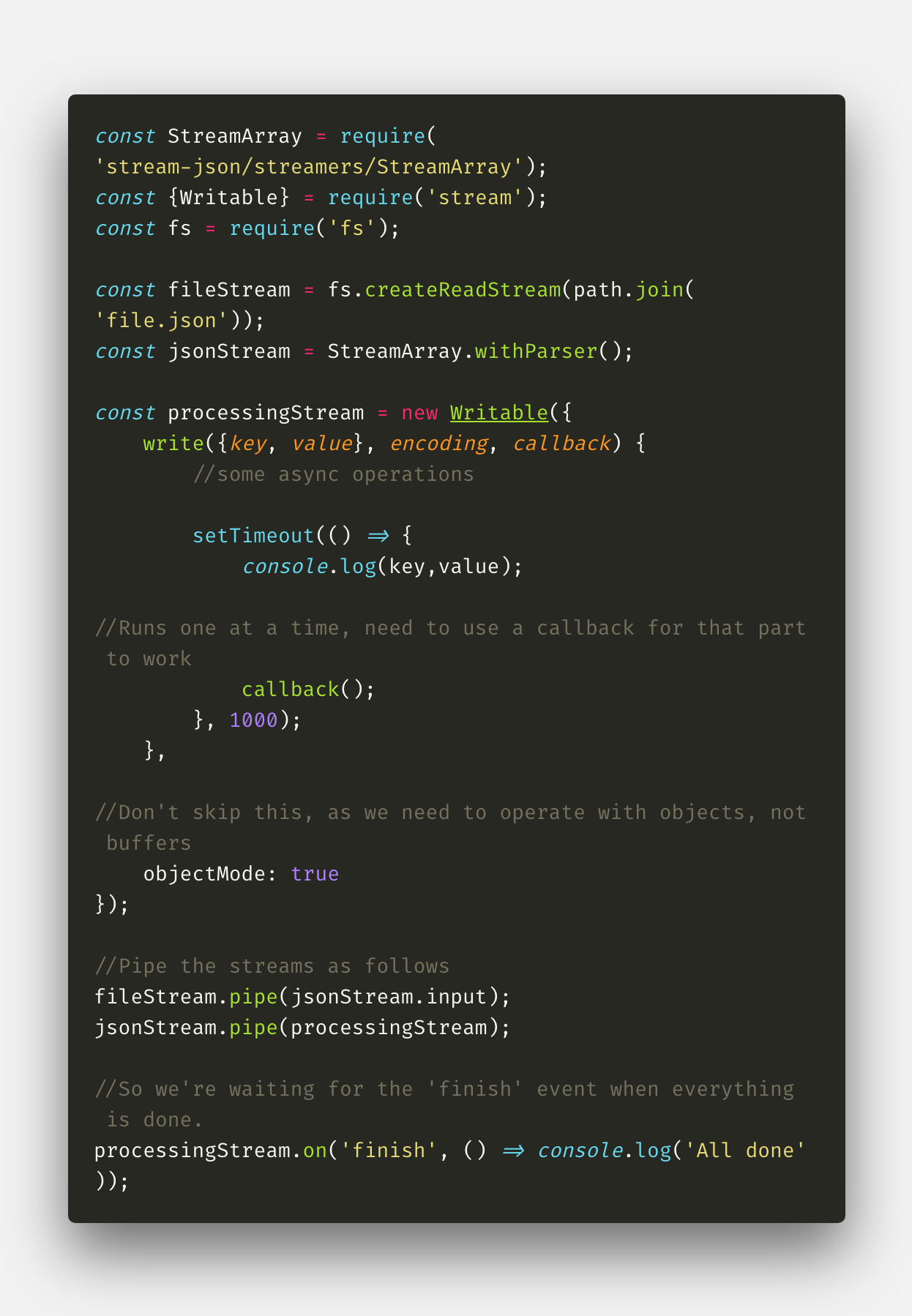

To solve this I also had to use a custom Writable stream.
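The idea can be sketched with Node's built-in `stream` module alone. In this example `Readable.from` stands in for the stream-json output (objects shaped like `{ key, value }`), and `saveRecord` is a hypothetical async step such as a database write. Both are assumptions for the sake of a self-contained example.

```javascript
const { Readable, Writable } = require('stream');

// Hypothetical async step standing in for a database write or HTTP call.
function saveRecord(record) {
  return new Promise((resolve) => setTimeout(() => resolve(record.id), 5));
}

let inFlight = 0;    // async operations currently pending
let maxInFlight = 0; // highest number pending at any one time
const saved = [];

const processingStream = new Writable({
  objectMode: true, // chunks are objects, not buffers
  write({ key, value }, _encoding, callback) {
    inFlight += 1;
    maxInFlight = Math.max(maxInFlight, inFlight);
    saveRecord(value).then(
      (id) => {
        saved.push(id);
        inFlight -= 1;
        callback(); // only now will the stream hand over the next chunk
      },
      (err) => callback(err) // report failures to the stream
    );
  },
});

// Stand-in source; in the real pipeline this would be the stream-json stream.
const source = Readable.from([
  { key: 0, value: { id: 1 } },
  { key: 1, value: { id: 2 } },
  { key: 2, value: { id: 3 } },
]);

source.pipe(processingStream);
processingStream.on('finish', () => {
  console.log(`Saved ${saved.length} records, max in flight: ${maxInFlight}`);
});
```

Because `write` is not called again until `callback` runs, only one async operation is pending at a time, and `pipe` pauses the source once the writable's internal buffer fills up.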

The Writable stream allows each asynchronous process to complete and its promise to resolve before continuing on to the next item, thus avoiding the memory backup.

The examples in this post come from this Stack Overflow answer:

https://stackoverflow.com/questions/42896447/parse-large-json-file-in-nodejs-and-handle-each-object-independently/42897498

Another thing I learned in this process: if you want to start Node with more than the default amount of RAM, you can pass the --max-old-space-size flag on the command line.

Node.js caps the V8 heap by default. The exact limit depends on the Node version and platform; 512 MB is the figure often quoted for older 32-bit builds, with larger defaults on 64-bit systems. To get past it, raise the limit with the --max-old-space-size flag, which takes a value in megabytes. For example, --max-old-space-size=4096 gives Node about 4 GB of heap to use.
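One way to check what limit a process actually got is to ask V8 directly. The small script below is a sketch; the file name `check-heap.js` is hypothetical. Running it as `node check-heap.js` and then as `node --max-old-space-size=4096 check-heap.js` should show the difference the flag makes.

```javascript
const v8 = require('v8');

// heap_size_limit is reported in bytes; convert to megabytes for readability.
const heapLimitMb = Math.round(
  v8.getHeapStatistics().heap_size_limit / (1024 * 1024)
);
console.log(`V8 heap limit: ${heapLimitMb} MB`);
```

The reported figure is usually a little higher than the flag value, since the flag sizes the old generation and the total limit also covers other heap spaces.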
